Measure step execution time

It is possible to configure tests in Loadero to record the execution time of certain steps, or even entire sequences of steps. This is achieved by using the "Time Execution" command. These measurements are referred to as timecards, for short.

In order to take advantage of this feature, you will need to supply instructions to the test script on exactly what actions need to have their execution time measured. If this information is not specified in the script, step execution time measurements will not be performed. This sets timecards apart from other performance metric measurements that may be taken during the test, such as machine statistics, WebRTC statistics, and MOS scores, which are all measured automatically without any necessary input within the script.

When calling this command in the script, two key properties should be provided:

- The name of the timecard. By giving the timecard a name, you will have an easier time discerning which measurement corresponds to which actions. For example, if you are measuring the time it takes for the next view to load after pressing a "Login" button, you could name the timecard `login`, `home_page_appeared_in`, `delay_after_pressing_login`, etc. We suggest choosing a unified approach to how timecards are named and sticking to it, so that interpreting results is easier.
- The sequence of actions you want to time (which can also just be one action). A timer will be initialized before the sequence begins execution, and the timer will be stopped once the sequence of actions finishes. After the timer is stopped, a corresponding timecard is generated.

Refer to the script command's documentation for instructions and script examples on how to set up these measurements in your framework of choice - Nightwatch.js, TestUI or Py-TestUI.

Timecard names do not have to be unique! If you are measuring the same sequence of actions multiple times within the same test (e.g., to monitor whether performance gets worse over time), you can re-use the same name over and over, which makes looping a viable mechanism in these tests.

At the end of the test, timecards will be grouped by name. This allows statistics to be calculated for a whole set of timecards, as long as their name is the same. As such, information will be available not only for what the duration was for each individual timecard, but also what the statistics - average, maximum, median, etc. - were for all timecards of this name.

However, these aggregated statistics are calculated only for the whole test run, not for each individual participant. For example, if you have 100 participants, each with 100 identically named timecards, Loadero will calculate statistics for that timecard across all 10 000 measurements in the run, but not separately for each participant. If you need per-participant statistics, you can retrieve the timecards through the Loadero API and calculate them yourself in a locally executed script.
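The difference between run-wide and per-participant statistics can be illustrated with a minimal Python sketch. The sample data, the tuple layout, and the helper names `summarize` and `group_durations` are all hypothetical and exist only for this illustration; they are not part of Loadero.

```python
from collections import defaultdict
from statistics import mean, median

# Hypothetical sample data: (participant, timecard name, duration in ms).
timecards = [
    ("participant-1", "search_time", 120),
    ("participant-1", "search_time", 180),
    ("participant-2", "search_time", 300),
    ("participant-2", "search_time", 200),
]


def summarize(durations):
    """Calculate a few aggregate statistics for a list of durations."""
    return {
        "count": len(durations),
        "average": mean(durations),
        "median": median(durations),
        "maximum": max(durations),
    }


def group_durations(timecards, key):
    """Group durations by the value returned from key(), then summarize each group."""
    groups = defaultdict(list)
    for participant, name, duration in timecards:
        groups[key(participant, name)].append(duration)
    return {group: summarize(durations) for group, durations in groups.items()}


# Run-wide grouping: by timecard name only.
run_wide = group_durations(timecards, key=lambda participant, name: name)

# Per-participant grouping: by participant and timecard name.
per_participant = group_durations(
    timecards, key=lambda participant, name: (participant, name)
)

print(run_wide)
print(per_participant)
```

Grouping by name alone merges all four measurements into one set, whereas grouping by participant and name keeps two measurements per participant.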

Timecards may contain other timecards - referred to as nested timecards. To create one, include another "Time Execution" command in the sequence of actions passed to the "Time Execution" command. Nested timecards give you granular detail about how long specific parts took, while still showing how long the larger process took as a whole.
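To make the timing semantics concrete, here is a plain-Python illustration of named and nested measurements. This is not the "Time Execution" command and does not use any Loadero API; the `timed` helper, the `timecards` list, and the timecard names are hypothetical. Refer to the command's documentation for the actual syntax in your framework.

```python
import time
from contextlib import contextmanager

# Completed measurements as (name, duration in ms), in the order they finish.
timecards = []


@contextmanager
def timed(name):
    """Start a timer before the wrapped actions and record it once they finish."""
    start = time.perf_counter()
    try:
        yield
    finally:
        elapsed_ms = (time.perf_counter() - start) * 1000
        timecards.append((name, elapsed_ms))


# The outer measurement covers the whole flow; the inner ones cover its parts.
with timed("login_flow"):
    with timed("open_url"):
        time.sleep(0.01)  # stand-in for a real action
    with timed("submit_credentials"):
        time.sleep(0.02)  # stand-in for a real action

for name, elapsed_ms in timecards:
    print(f"{name}: {elapsed_ms:.1f} ms")
```

The inner measurements finish first, so they are recorded before the outer one, and the outer duration is at least the sum of the inner durations.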

Where to find measurements

If you already have an idea of performance requirements and how long certain actions should be allowed to take, you can set up automatic assertions that will execute at the end of the test. If these assertions pass, then you will already know that those particular execution times do not need to be inspected. If they fail - then so will the participant, which will let you know that the results should be analyzed in detail. Read more about setting these assertions up here.

Participant Selenium log

Whenever a participant finishes executing the "Time Execution" command, the timecard is output to the Selenium log. More info about how this output looks is provided in the documentation of the command.

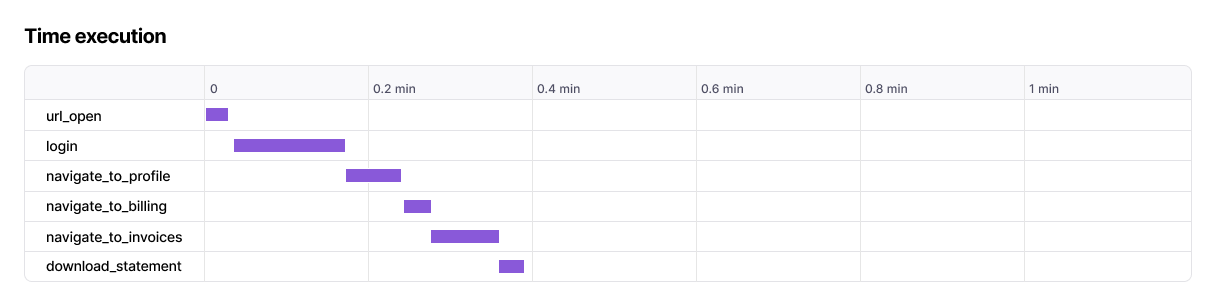

Participant Gantt chart

All timecards generated during a participant's runtime will be aggregated into a Gantt chart. This chart can be found at the bottom of the participant's summary page.

If the participant generated nested timecards, the Gantt chart will use indentation to indicate that the timecard is a component of another timecard. A timecard that contains nested timecards can also be collapsed, to hide details about the nested timecards and only show the total duration of the higher-level timecard.

Run report metric tables

The run report's summary page groups timecards of the same name together, allowing you to see additional statistics, such as the average, 75th percentile, or maximum duration of that timecard (as well as other aggregate functions). E.g., if two participants have created a timecard of the name `open_url`, and participant A measured it as 3000 ms, whereas participant B measured it as 5000 ms, the run report will be able to tell you that the average duration of the `open_url` timecard was 4000 ms across the entire test run.

How to perform custom calculations on measurements

If you need to make some custom calculations for statistics that are not provided by Loadero already, you can use the Loadero API, or one of our API clients - Loadero Python or Loadero Java - to iterate across all the results of a test, retrieve all timecards generated, perform the necessary calculations and output them to a `.csv` file in a custom-made script.

For example, suppose you have executed a 100-participant test in which each participant runs a search function 100 times and measures it under the timecard `search_time`. That amounts to 10 000 timecards generated during the test as a whole. You may need to find out not just the median execution time of the function across all 10 000 timecards, but also how long the 1st iteration took for every participant. Looking at 100 different result reports manually to find this information would be impractical. The example below describes how this could be achieved through the Loadero API.

API usage example

Given that you have generated an API token in your project's settings with the appropriate READ scopes, you can achieve the objective described in the paragraph above with a script in which you:

- Retrieve all results of a test run via the endpoint `GET /v2/projects/{projectID}/tests/{testID}/runs/{runID}/results/`. This endpoint returns a list of objects, each of which represents one result - a participant that executed the test. Store the `id` field of all these results.
- Iterate over each `id` and call the endpoint `GET /v2/projects/{projectID}/tests/{testID}/runs/{runID}/results/{resultID}/`, where `resultID` is the `id` you retrieved in the previous step - the one you are currently iterating over. The response contains a `data_sync` object, which itself contains a `result_timecards` list. Each object in `result_timecards` represents one timecard - a step execution time measurement.
- Analyze `result_timecards` to identify the first `search_time` timecard of that participant and store it in a data structure, such as a list or a dictionary consisting of pairs like `{resultID, timecard_value}`. Then continue iterating and do the same for every other result ID, to retrieve the first `search_time` timecard of the other participants as well.
- Output these timecards to a `.csv` file, or any other format of your liking.
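The steps above can be sketched in Python using only the standard library. Treat this as a starting point rather than a definitive implementation, because several details are assumptions that are not confirmed by this page: the base URL, the `LoaderoAuth` authorization scheme, the `results` key in the list response, the `name` and `value` fields of a timecard, and the assumption that `result_timecards` is ordered by the time the timecards were recorded. Pagination of the results list is not handled. Check the Loadero API reference and adjust these names before using the script.

```python
import csv
import json
import urllib.request

BASE_URL = "https://api.loadero.com/v2"  # assumed base URL


def fetch_json(url, token):
    """GET a URL and decode the JSON body. The auth header scheme is an assumption."""
    request = urllib.request.Request(
        url, headers={"Authorization": f"LoaderoAuth {token}"}
    )
    with urllib.request.urlopen(request) as response:
        return json.load(response)


def first_timecards(project_id, test_id, run_id, token, name, fetch=fetch_json):
    """Return (result ID, value) pairs for each result's first timecard called name."""
    results_url = (
        f"{BASE_URL}/projects/{project_id}/tests/{test_id}/runs/{run_id}/results/"
    )
    listing = fetch(results_url, token)
    rows = []
    for result in listing["results"]:  # assumed key holding the list of results
        detail = fetch(f"{results_url}{result['id']}/", token)
        timecards = (detail.get("data_sync") or {}).get("result_timecards") or []
        # Assumes the list is in recording order, so the first match is the
        # first iteration. "name" and "value" are assumed field names.
        match = next((card for card in timecards if card["name"] == name), None)
        if match is not None:
            rows.append((result["id"], match["value"]))
    return rows


def write_csv(rows, path):
    """Write the collected pairs to a CSV file with a header row."""
    with open(path, "w", newline="") as handle:
        writer = csv.writer(handle)
        writer.writerow(["result_id", "timecard_value"])
        writer.writerows(rows)
```

A call such as `write_csv(first_timecards(project_id, test_id, run_id, token, "search_time"), "first_search_time.csv")` would then produce one row per participant that recorded the timecard. The `fetch` parameter exists so that the HTTP layer can be replaced, for example with a stub during testing or with one of the Loadero API clients.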